Get Recallium running in under 2 minutes.

Recallium is an AI memory and project intelligence server that gives your IDE agents persistent memory. It uses the Model Context Protocol (MCP) to store, search, and reason about your code, decisions, and project knowledge—creating a digital twin that grows smarter over time.

| Feature | What You Get |

|---|---|

| Persistent Memory | Decisions, patterns, learnings saved across sessions |

| Cross-Project Intelligence | Lessons learned once → applied everywhere |

| Document Knowledge Base | Upload PDFs, specs → AI understands instantly |

| 16 MCP Tools | Full toolkit for IDE agents |

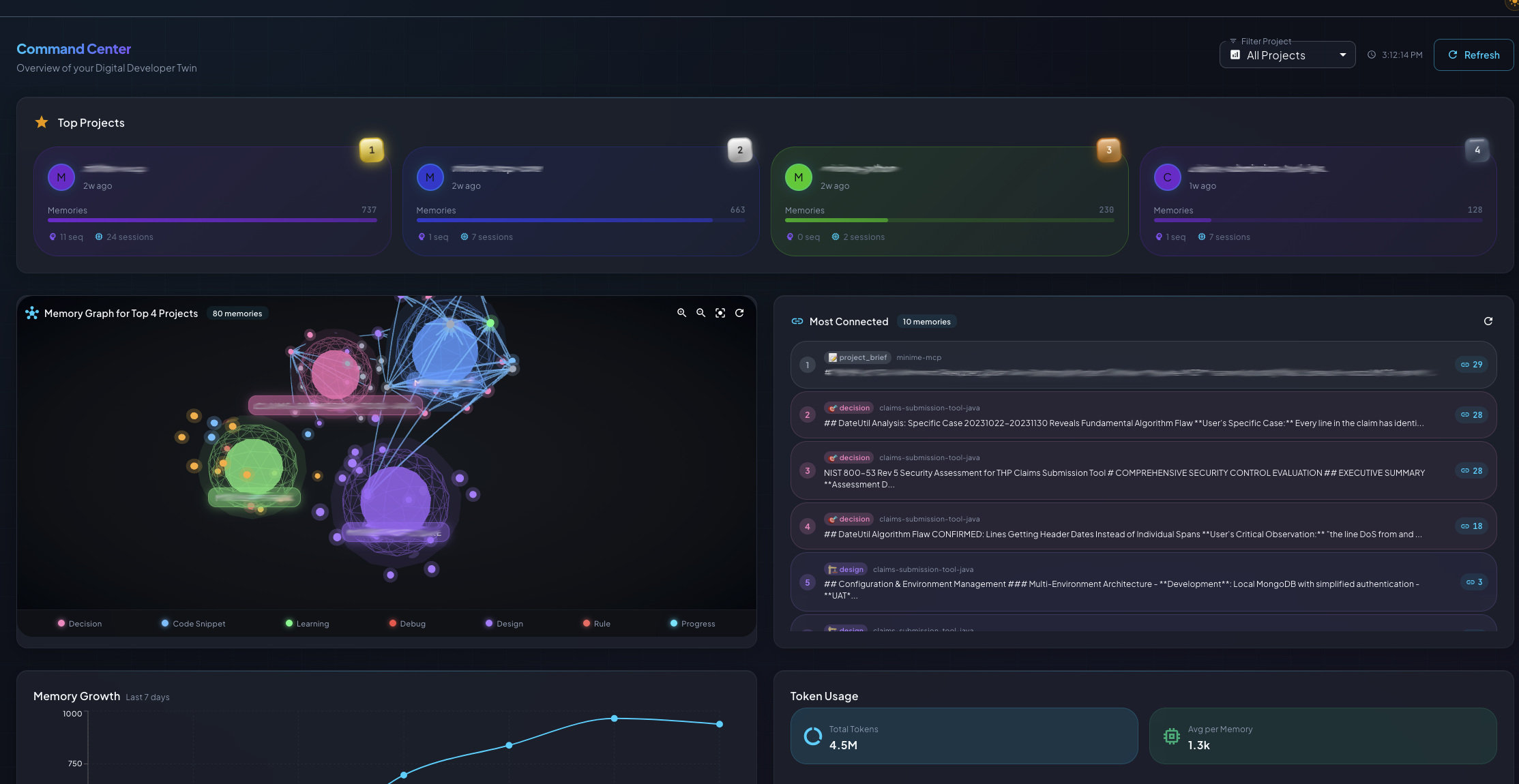

| Web Dashboard | 18-page UI for management and insights |

Want to run completely free and private? Use Ollama + built-in embeddings:

- LLM: Ollama (Llama 3, Mistral, Qwen; runs locally)
- Embeddings: GTE-Large (built-in, no API needed)
- Cost: $0

Just install Ollama, pull a model, and select it in the Setup Wizard. Your data never leaves your machine.

| Connection Type | IDEs | Setup |
|---|---|---|
| HTTP Direct (recommended) | Cursor, VS Code, Claude Code, Claude Desktop, Windsurf, Roo Code, Visual Studio 2022 | Just add URL to config |
| npm Client (stdio→HTTP bridge) | Zed, JetBrains, Cline, BoltAI, Augment Code, Warp, Amazon Q | Install first: `npm install -g recallium` |

Install Docker for your platform.

Install Ollama:

```bash
# macOS
brew install ollama

# Linux
curl -fsSL https://ollama.ai/install.sh | sh

# Windows
# Download from https://ollama.ai/download
```

Start Ollama:

```bash
ollama serve
```

Then pull a model:

```bash
# Required for insights (or use OpenAI/Anthropic)
ollama pull qwen2.5-coder:7b
```
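Before starting Recallium you may want to confirm the model was actually pulled. A minimal sketch: the hypothetical `has_ollama_model` helper reads `ollama list` output on stdin and checks the first (NAME) column, assuming the current table layout with a single header row.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) if MODEL appears in the NAME column
# of `ollama list` output read from stdin. Assumes one header row.
has_ollama_model() {
  awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit (found ? 0 : 1) }'
}

# Usage (needs a running Ollama):
#   ollama list | has_ollama_model qwen2.5-coder:7b || ollama pull qwen2.5-coder:7b
```

The match is exact, so include the tag (for example `:7b`) in the model name you check for.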

Recallium uses HTTP transport, so most modern IDEs connect directly to the Docker container:

- No npm client needed (HTTP or extension): Cursor, VS Code, Claude Code, Claude Desktop, Windsurf, and 10+ other IDEs
- npm client required (stdio→HTTP bridge): Zed, JetBrains, Cline, BoltAI, and other command-only IDEs

If your IDE requires the npm client (see IDE Integration section below), install it:

```bash
npm install -g recallium
```

```bash
cd install
chmod +x start-recallium.sh
./start-recallium.sh
```

What the script does:

- Checks that recallium.env exists

That's it! Visit http://localhost:9001 to complete setup.

On Linux, run the same script:

```bash
cd install
chmod +x start-recallium.sh
./start-recallium.sh
```

Visit http://localhost:9001 to complete setup.

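Note: The start script binds ports to IPv6 (`[::]`) for Safari compatibility on macOS. If you encounter port binding errors on Linux, your Docker daemon may not have IPv6 enabled. Two options:

Option 1: Enable IPv6 in Docker by editing /etc/docker/daemon.json:

```json
{ "ipv6": true }
```

Then restart Docker:

```bash
sudo systemctl restart docker
```

Option 2: Bind to IPv4 instead by changing the port mapping in start-recallium.sh:

```bash
# Change:
-p "[::]:${PORT}:9000"
# To:
-p "${PORT}:9000"
```

Windows requires additional Ollama configuration for Docker connectivity.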

Step 1: Configure Ollama Environment Variable (one-time setup)

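Set the `OLLAMA_HOST` environment variable to `0.0.0.0:11434` so Ollama listens on all network interfaces and the Docker container can reach it.

Step 2: Add Windows Firewall Rule (one-time setup)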

Open Command Prompt or PowerShell as Administrator and run:

```bat
netsh advfirewall firewall add rule name="Ollama" dir=in action=allow program="C:\Users\<YOUR_USERNAME>\AppData\Local\Programs\Ollama\ollama.exe" enable=yes profile=private
```

Replace <YOUR_USERNAME> with your Windows username.

Step 3: Start Recallium

```bat
cd install
start-recallium.bat
```

What the script does:

- Checks that recallium.env exists
- Sets OLLAMA_HOST/OLLAMA_BASE_URL in recallium.env to your machine's IP address

Visit http://localhost:9001 to complete setup.

Use docker-compose if you want more control or need to customize the setup.

```bash
cd install
docker compose --env-file recallium.env pull   # Download latest image
docker compose --env-file recallium.env up -d  # Start container
```

On Windows, if you use docker-compose instead of start-recallium.bat, you must manually update recallium.env with your IP address:

```bash
OLLAMA_HOST=http://YOUR_IP:11434
OLLAMA_BASE_URL=http://YOUR_IP:11434
```

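Find your IP address with:

```bat
ipconfig | findstr "IPv4"
```

Advantages of docker-compose: more control over the setup, with customization through docker-compose.yml.

Default ports work for most users. Change them only if they conflict with other services.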

Edit recallium.env before starting:

```bash
HOST_UI_PORT=9001 # Web UI: http://localhost:9001
HOST_API_PORT=8001 # MCP API: http://localhost:8001
HOST_POSTGRES_PORT=5433 # PostgreSQL: localhost:5433
VOLUME_NAME=recallium-v1 # Data volume name
```

Port mapping:

| Your Machine | Container | Service |
|---|---|---|
| HOST_UI_PORT (9001) | 9000 | Web UI |
| HOST_API_PORT (8001) | 8000 | MCP API |
| HOST_POSTGRES_PORT (5433) | 5432 | PostgreSQL |

Important: If you change HOST_API_PORT, update your IDE's MCP configuration to match.
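To see which URLs your current recallium.env implies (and therefore what your IDE's MCP configuration should point at), a small script can read the port settings. A minimal sketch with hypothetical helper names `env_value` and `recallium_urls`; it assumes simple `KEY=VALUE` lines with optional trailing `# comments`, as in the port settings listed earlier, and falls back to the default ports from the table.

```shell
#!/bin/sh
# Hypothetical helper: print the value of KEY from an env file, dropping a
# trailing "# comment" and falling back to DEFAULT when the key is absent.
# Note: values that themselves contain '#' are not supported.
env_value() {
  file="$1"; key="$2"; default="$3"
  value=$(sed -n "s/^${key}=//p" "$file" | tail -n 1 | sed 's/[[:space:]]*#.*$//; s/[[:space:]]*$//')
  printf '%s\n' "${value:-$default}"
}

# Print the host-side URLs implied by recallium.env (defaults from the table).
recallium_urls() {
  file="${1:-recallium.env}"
  ui=$(env_value "$file" HOST_UI_PORT 9001)
  api=$(env_value "$file" HOST_API_PORT 8001)
  printf 'Web UI:  http://localhost:%s\n' "$ui"
  printf 'MCP API: http://localhost:%s/mcp\n' "$api"
  printf 'Health:  http://localhost:%s/health\n' "$api"
}

# Usage (from install/):
#   recallium_urls recallium.env
```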

Once started, Recallium is available at:

- Web UI: http://localhost:9001 (or your configured HOST_UI_PORT)
- MCP API: http://localhost:8001 (or your configured HOST_API_PORT)
- Health check: http://localhost:8001/health

Verify the installation:

```bash
# 1. Check container is running
docker ps -f name=recallium

# 2. Verify health endpoint
curl http://localhost:8001/health
# Expected: {"status":"healthy",...}

# 3. Check MCP tools are available
curl http://localhost:8001/mcp/status
# Expected: List of 16 available tools

# 4. Open the Web UI
open http://localhost:9001 # macOS
# or visit http://localhost:9001 in your browser
```

If all checks pass, proceed to the Setup Wizard!
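If you script the startup, the health check can be automated. A minimal sketch, assuming the /health body contains `"status":"healthy"` as in the expected output; `is_healthy` and `wait_for_recallium` are hypothetical names, and the default of 30 attempts, 2 seconds apart, is an arbitrary choice.

```shell
#!/bin/sh
# Succeed if a /health response body reports "status":"healthy"
# (spaces around the colon are tolerated).
is_healthy() {
  printf '%s' "$1" | grep -Eq '"status"[[:space:]]*:[[:space:]]*"healthy"'
}

# Poll http://localhost:$HOST_API_PORT/health (default port 8001) until healthy.
# Argument: number of attempts (default 30); waits 2 seconds between attempts.
wait_for_recallium() {
  url="http://localhost:${HOST_API_PORT:-8001}/health"
  tries="${1:-30}"
  while [ "$tries" -gt 0 ]; do
    body=$(curl -fsS "$url" 2>/dev/null || true)
    if is_healthy "$body"; then
      echo "Recallium is healthy at $url"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "Recallium did not report healthy at $url" >&2
  return 1
}

# Usage:
#   wait_for_recallium 30 && open http://localhost:9001
```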

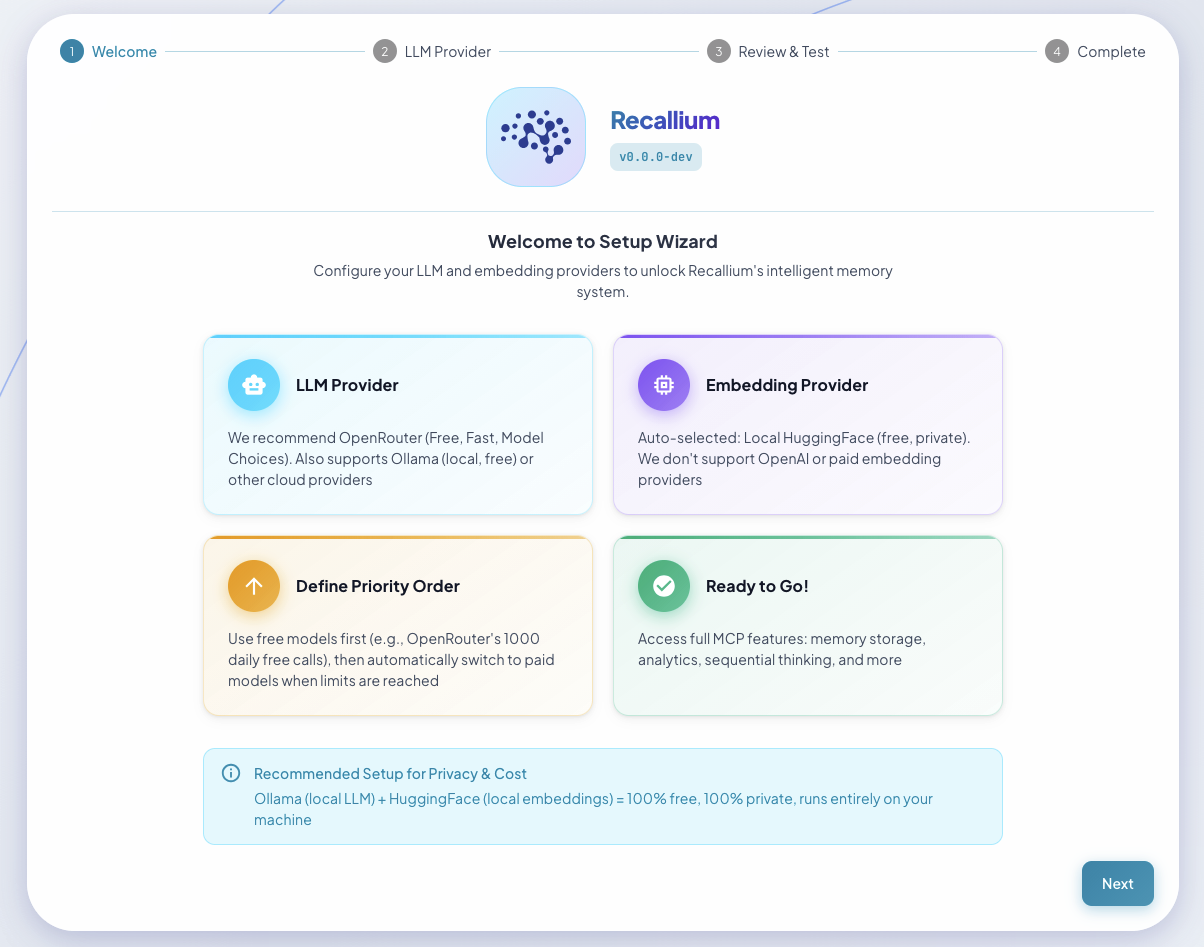

On first launch, visit http://localhost:9001 to complete the setup wizard:

The Setup Wizard guides you through initial configuration

Select from 5 supported LLM providers

Recallium supports five LLM providers, so you can use what you already have:

| Provider | Models | Notes |
|---|---|---|
| Anthropic | Claude 3.5 Sonnet, Claude 3 Opus/Sonnet/Haiku | Recommended for best results |
| OpenAI | GPT-4o, GPT-4 Turbo, GPT-3.5 Turbo | Function calling, streaming |
| Google Gemini | Gemini 1.5 Pro, Gemini 1.5 Flash | Multi-modal support |
| Ollama | Llama 3, Mistral, Qwen, any local model | Free, runs locally |
| OpenRouter | 100+ models via single API | Access any model |

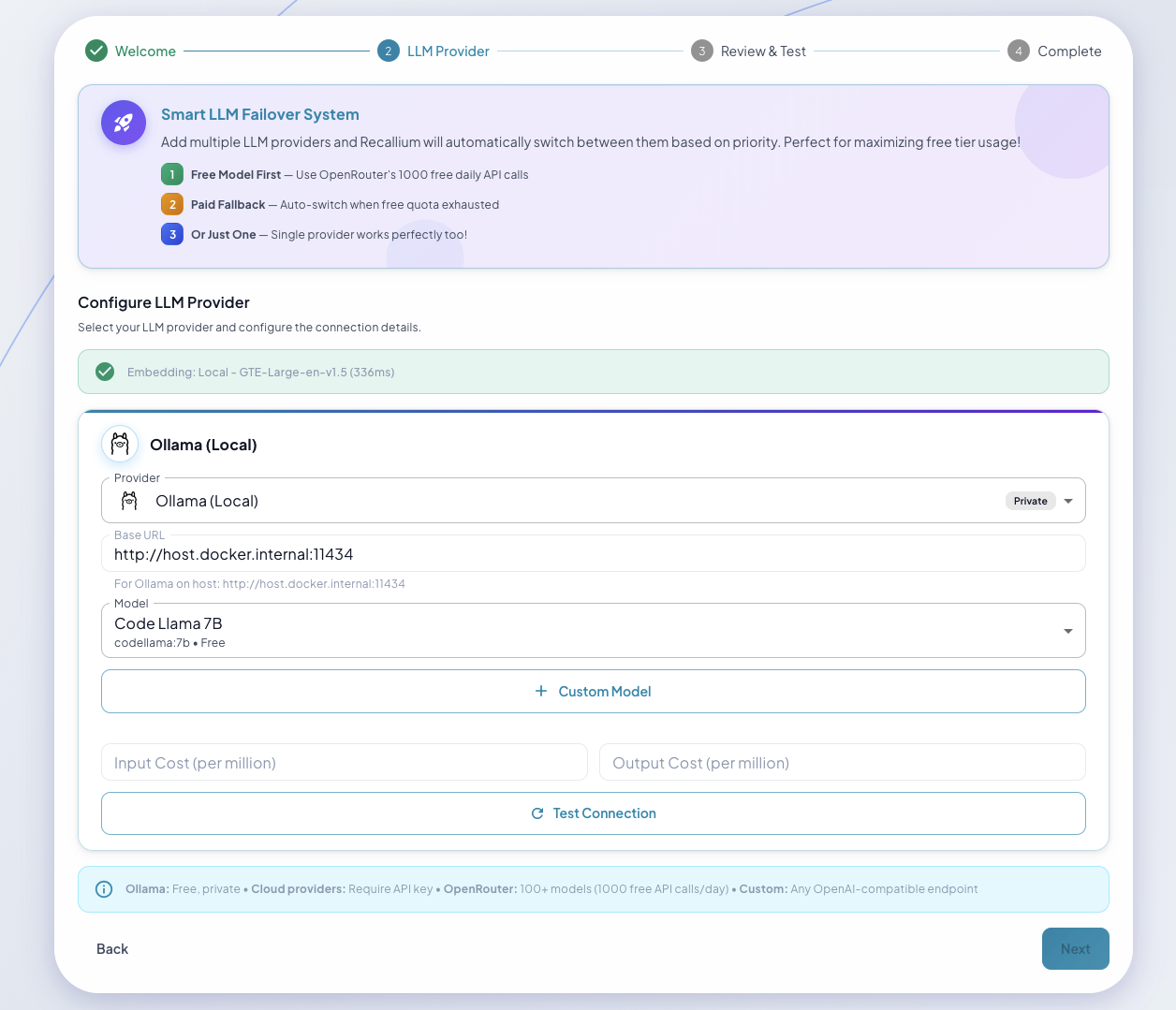

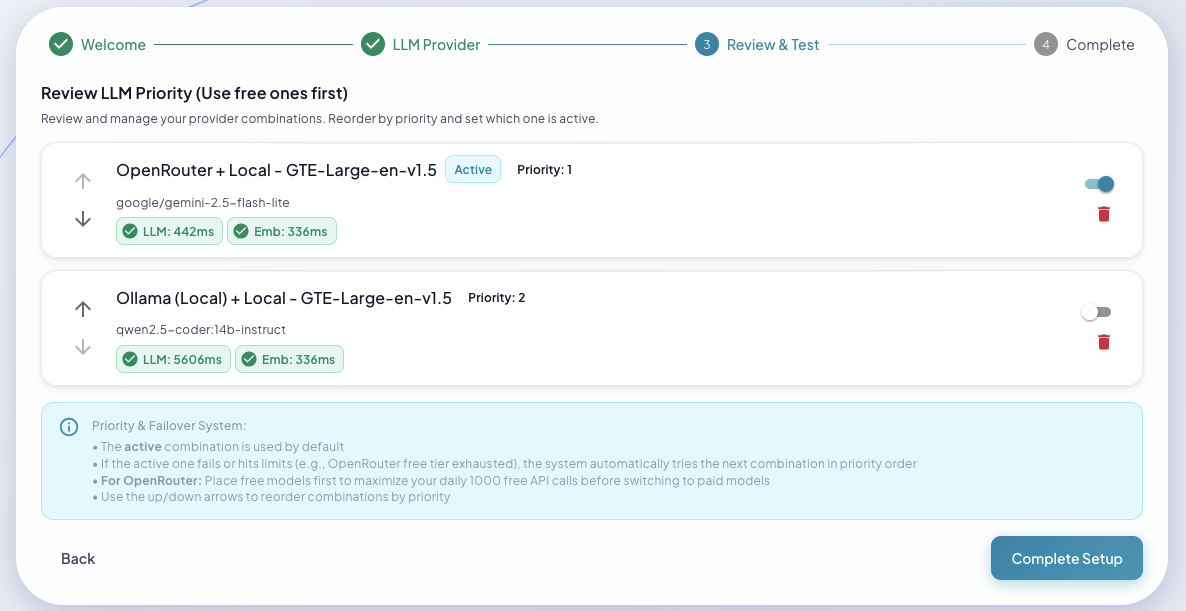

The setup wizard lets you:

Configure provider priority and automatic failover

Once configured, the MCP tools become available to all connected IDEs.

After setup: Your dashboard shows system stats, recent activity, and quick access to all features

Want to run completely free and private?

- LLM: Ollama (local models like Llama 3, Mistral)
- Embeddings: GTE-Large (built-in, runs locally)

Just select Ollama in the setup wizard and ensure Ollama is running locally.

The recallium.env file contains all configuration options. Key settings:

```bash
POSTGRES_PASSWORD=recallium_password # Change in production!
```
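One way to replace the default password is to generate a random value and write it into recallium.env. A minimal sketch with hypothetical helper names, reading random bytes from /dev/urandom via `od`. If Recallium's database behaves like the standard Postgres container image (an assumption), the password is only applied when the data volume is first initialized, so set it before the first start.

```shell
#!/bin/sh
# Hypothetical helper: print a random 48-character hex string (24 bytes).
new_postgres_password() {
  od -An -tx1 -N24 /dev/urandom | tr -d ' \n'
}

# Replace the POSTGRES_PASSWORD line in an env file with a fresh random value.
# Hex output needs no escaping in the sed replacement; the inline comment on
# that line is dropped.
set_postgres_password() {
  file="${1:-recallium.env}"
  pw=$(new_postgres_password)
  sed "s/^POSTGRES_PASSWORD=.*/POSTGRES_PASSWORD=${pw}/" "$file" > "${file}.tmp" &&
    mv "${file}.tmp" "$file"
}

# Usage (from install/, before the first start):
#   set_postgres_password recallium.env
```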

Configure via Setup Wizard at http://localhost:9001

API keys are securely vaulted inside your Docker container—never stored in plain text files.

| Provider | Models | Notes |
|---|---|---|
| Ollama | Llama 3, Mistral, Qwen, any local model | Free, local - Default |
| Anthropic | Claude 3.5 Sonnet, Claude 3 Opus/Sonnet/Haiku | Recommended for best results |
| OpenAI | GPT-4o, GPT-4 Turbo, GPT-3.5 Turbo | Function calling, streaming |
| Google Gemini | Gemini 1.5 Pro, Gemini 1.5 Flash | Multi-modal support |
| OpenRouter | 100+ models via single API | Access any model |

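The Setup Wizard lets you configure provider priority and automatic failover.

Host port settings in recallium.env:

```bash
HOST_UI_PORT=9001 # Access UI on your machine
HOST_API_PORT=8001 # Access MCP API on your machine
HOST_POSTGRES_PORT=5433 # Access PostgreSQL on your machine
```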

See recallium.env for all available options with detailed inline documentation.

All configuration is managed through recallium.env (single source of truth). Common customizations:

Change the LLM model:

```bash
# Edit install/recallium.env
LLM_MODEL=llama3.2:3b # Smaller, faster
# or
# LLM_MODEL=gpt-oss:20b # Larger, more accurate
```

Adjust chunk size:

```bash
# Edit install/recallium.env
CHUNK_SIZE_TOKENS=400 # Safe with 22% margin (recommended)
# or
# CHUNK_SIZE_TOKENS=450 # Moderate margin
```
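The 22% figure is consistent with a 512-token embedding input limit, which is GTE-Large's maximum sequence length. Assuming that is the limit the margin is measured against (the limit itself is not stated here), the arithmetic works out as:

$$
\text{margin} = 1 - \frac{\text{CHUNK\_SIZE\_TOKENS}}{512}, \qquad
1 - \frac{400}{512} = \frac{112}{512} \approx 21.9\%, \qquad
1 - \frac{450}{512} = \frac{62}{512} \approx 12.1\%
$$

Under the same assumption, 450 tokens leaves roughly half the headroom of 400, which fits its "moderate margin" label.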

Tune performance:

```bash
# Edit install/recallium.env
BATCH_SIZE=20 # Process more memories at once
MAX_CONCURRENT=10 # More parallel operations
QUEUE_WORKERS=3 # More background workers
```

To switch LLM providers, use the Setup Wizard at http://localhost:9001 → Providers.

No container restart is required; changes take effect immediately.

Disable optional processing:

```bash
# Edit install/recallium.env
ENABLE_PATTERN_MATCHING=false # Skip pattern detection
ENABLE_UNIFIED_INSIGHTS=false # Disable insights processing
REAL_TIME_PROCESSING=false # Queue for batch processing
```

After editing recallium.env, restart the container:

```bash
cd install
docker compose down
docker compose --env-file recallium.env up -d
```
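Edits like the ones above can also be scripted. A minimal sketch of a hypothetical `set_env_var` helper that replaces an existing `KEY=` line, or appends one if the key is missing; run the restart commands afterwards so the container picks up the change.

```shell
#!/bin/sh
# Hypothetical helper: set KEY=VALUE in an env file. Existing KEY= lines are
# rewritten (their inline comments are dropped); a missing key is appended.
# awk -v interprets backslash escapes, so avoid backslashes in VALUE.
set_env_var() {
  file="$1"; key="$2"; value="$3"
  tmp="${file}.tmp"
  if grep -q "^${key}=" "$file"; then
    awk -v k="$key" -v v="$value" 'index($0, k "=") == 1 { print k "=" v; next } { print }' "$file" > "$tmp"
    mv "$tmp" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (from install/):
#   set_env_var recallium.env LLM_MODEL llama3.2:3b
#   docker compose down && docker compose --env-file recallium.env up -d
```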

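Recallium supports two connection methods:

HTTP Direct (recommended): these IDEs connect directly to http://localhost:8001/mcp: Cursor, VS Code, Claude Code, Claude Desktop, Windsurf, Roo Code, Visual Studio 2022.

npm client: these IDEs only support command-based connections and need the npm client as a stdio→HTTP bridge: Zed, JetBrains, Cline, BoltAI, Augment Code, Warp, Amazon Q.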

First, install the npm client:

```bash
npm install -g @recallium/mcp-client
```

Note: If you changed HOST_API_PORT in recallium.env from the default 8001, update the URL in your IDE config accordingly.
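For HTTP-direct IDEs, the config entry only needs the endpoint URL, built from HOST_API_PORT. A minimal sketch that prints such an entry; the `mcpServers` / `url` JSON shape follows Cursor-style `mcp.json` files and is an assumption here, since key names differ between IDEs, and the server name `recallium` is arbitrary.

```shell
#!/bin/sh
# Build the MCP endpoint URL; the port defaults to 8001 (HOST_API_PORT).
recallium_mcp_url() {
  printf 'http://localhost:%s/mcp\n' "${1:-8001}"
}

# Print a Cursor-style mcpServers entry (assumed shape; adjust keys per IDE).
recallium_http_config() {
  url=$(recallium_mcp_url "$1")
  printf '{\n  "mcpServers": {\n    "recallium": {\n      "url": "%s"\n    }\n  }\n}\n' "$url"
}

# Usage:
#   recallium_http_config 8001
```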

Common Docker commands (run from install/):

```bash
# View logs
docker compose --env-file recallium.env logs -f

# Stop
docker compose down

# Restart
docker compose --env-file recallium.env restart

# Update to latest version
docker compose --env-file recallium.env pull
docker compose --env-file recallium.env up -d

# Reset everything (deletes all data!)
docker compose down -v
```

If you don't want to manually invoke Recallium tools every time, you can define rules in your IDE to automatically use Recallium for specific tasks:

- Cursor: Cursor Settings > Rules section
- Claude Code: .clauderc or CLAUDE.md file
- Windsurf: .windsurfrules file

Example rule:

```text
Always use recallium MCP tools when working with this codebase. Specifically:
- Use store_memory to capture implementation decisions, learnings, and code context
- Use search_memories to find past decisions and context before making changes
- Use get_rules at the start of each session to load project behavioral guidelines
- Link memories to files using related_files parameter for better code searchability
```

From then on, your AI assistant will automatically use Recallium's persistent memory without you having to explicitly request it.
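For file-based rules (for example CLAUDE.md or .windsurfrules), adding the rule can be scripted. A minimal sketch with a hypothetical `add_recallium_rule` helper that appends the example rule only if its first sentence is not already in the file, so running it twice is harmless. Cursor rules live in the settings UI, so this does not apply there.

```shell
#!/bin/sh
# Hypothetical helper: append the example Recallium rule to a rules file
# (e.g. CLAUDE.md or .windsurfrules) unless it is already present.
add_recallium_rule() {
  rules_file="$1"
  marker="Always use recallium MCP tools when working with this codebase."
  if [ -f "$rules_file" ] && grep -qF "$marker" "$rules_file"; then
    echo "rule already present in $rules_file"
    return 0
  fi
  cat >> "$rules_file" <<'EOF'

Always use recallium MCP tools when working with this codebase. Specifically:
- Use store_memory to capture implementation decisions, learnings, and code context
- Use search_memories to find past decisions and context before making changes
- Use get_rules at the start of each session to load project behavioral guidelines
- Link memories to files using related_files parameter for better code searchability
EOF
}

# Usage (from your project root):
#   add_recallium_rule CLAUDE.md
```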

You can test and debug your Recallium MCP connection using the official MCP Inspector:

```bash
# Test HTTP connection
npx @modelcontextprotocol/inspector http://localhost:8001/mcp

# Test npm client (stdio→HTTP bridge)
npx @modelcontextprotocol/inspector npx -y recallium-mcp
```

The inspector provides a web interface for inspecting the server and calling its tools, which makes connection problems easier to isolate.

Once your IDE is connected, try these commands with your AI assistant:

- "recallium" → Magic summon: loads all your project context in one call
- "Store a memory: We decided to use PostgreSQL because..." → Saves your decision with auto-tagging
- "Search my memories about authentication" → Finds past decisions, patterns, learnings
- "What was I working on last week?" → Session recap with recent activity
- "Get insights about my database patterns" → Cross-project pattern analysis

| Capability | Description |
|---|---|
| Store memories | Decisions, patterns, learnings automatically preserved |
| Search across projects | Find past context instantly |
| Get insights | Pattern analysis across your work |
| Link projects | Share knowledge between related projects |
| Upload documents | PDFs, docs become searchable knowledge |
| Manage tasks | Track TODOs with linked memories |
| Structured thinking | Document complex problem-solving |